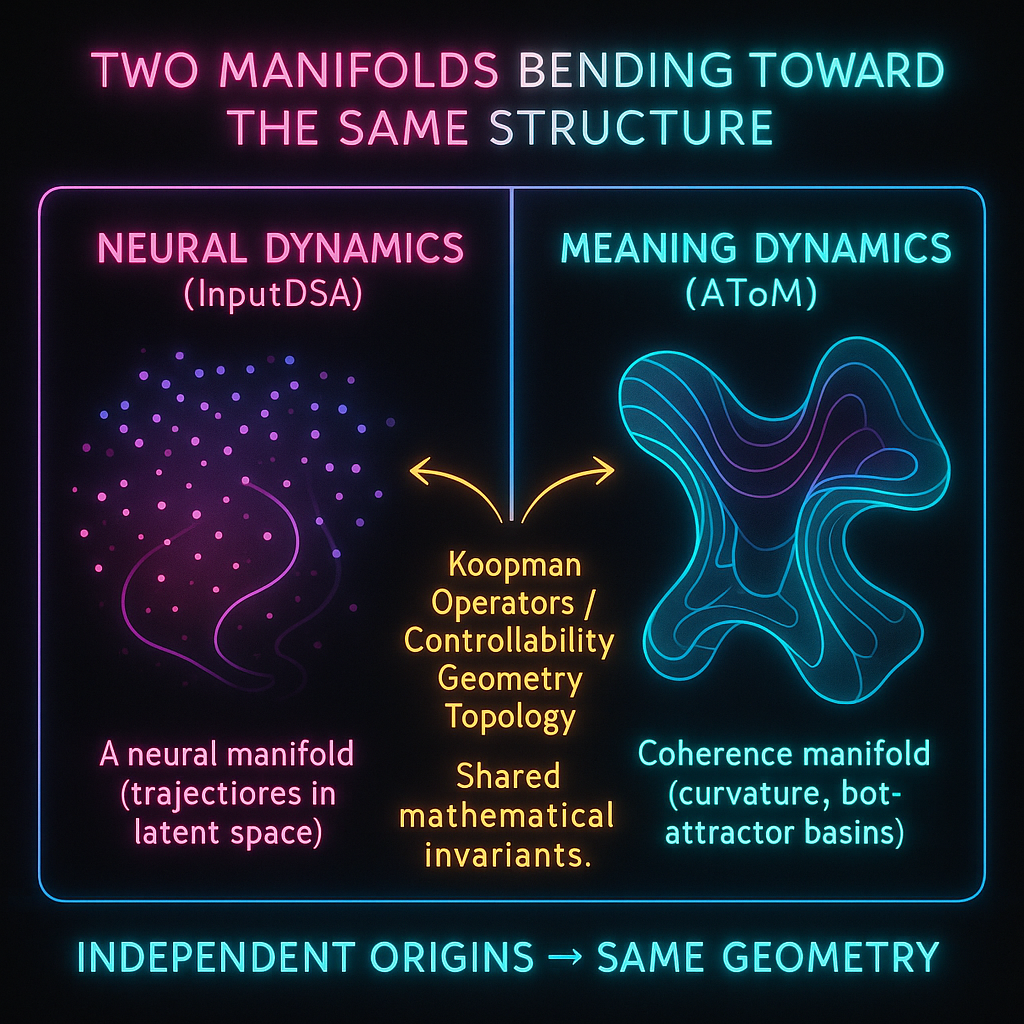

When Two Theories Discover They’re Speaking the Same Math

How AToM, a human-origin framework built in 7 days, unexpectedly converged with cutting-edge MIT/Harvard neuroscience.

A case study in geometry, velocity, and meaning.

A Note on What This Is (and What It Isn’t)

This article describes the surprising structural alignment between:

1. InputDSA — a rigorous, peer-reviewed, empirically validated neural-dynamics method from MIT/Harvard (https://arxiv.org/pdf/2510.25943)

(Ostrow, Huang, Nakkiran, Kozachkov, Rajan, Fiete)

2. AToM (A Theory of Meaning), a human-origin framework I developed during a week of intense, ‘somatically’ unmasked autistic cognition, using LLMs (ChatGPT, Claude, Grok) only as tools for consistency checking, formalization, and cross-document coherence.

AToM is not LLM-generated.

It is not co-authored by any model.

The conceptual invariants, geometry, predictions, and cross-scale architecture are entirely human-designed.

LLMs played the role of:

- exocortex for memory extension

- dialectical counter-arguers

- consistency checkers

- notation stabilizers

The ideas—the structure, the comparisons, the invariants—are mine.

What makes the convergence noteworthy is that these two projects were generated completely independently:

- InputDSA: many years of empirical, technical neuroscience.

- AToM: a human-origin theoretical framework synthesized in a week using LLMs only as accelerators.

They should not match.

But they do.

And the mathematics is the reason.

What InputDSA Achieved

(Summarized faithfully from the paper)

InputDSA solves a foundational problem in computational neuroscience:

How do you compare two dynamical systems when both are being driven by external inputs?

Their contributions:

1. SubspaceDMDc: an extension of dynamic mode decomposition (DMD) that handles input-driven, partially observed nonlinear systems.

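2. Separation of intrinsic vs. input-driven dynamics by estimating A (state matrix) and B (input matrix) in a linear model x(t+1) = A x(t) + B u(t), where x is the (possibly latent) state and u the external input.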

3. Construction of controllability matrices

K = [B AB A²B … A^(T–1)B]

allowing direct comparison of input-space geometry.

4. Three similarity notions:

- state similarity

- input similarity

- joint similarity

Demonstrated on:

✔ RNNs trained on reinforcement-learning (RL) tasks

✔ Rat neural populations during decision-making

✔ Phase transitions at “neural time of commitment”

This is real, careful, high-quality science.
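The separation of A and B and the controllability matrix described above can be sketched in a few lines. This is a minimal illustration of plain DMD with control (DMDc), not SubspaceDMDc: it assumes the full state is observed and the system is linear, x(t+1) ≈ A x(t) + B u(t), whereas SubspaceDMDc is described as handling partially observed, nonlinear systems. The helper names `fit_dmdc` and `controllability_matrix` are hypothetical, not InputDSA's API.

```python
import numpy as np

def fit_dmdc(X, Xp, U):
    """Least-squares DMDc: find [A B] minimizing ||Xp - [A B] [X; U]||_F.

    X  : (n, m) states x_0 .. x_{m-1} as columns
    Xp : (n, m) the same states one step later, x_1 .. x_m
    U  : (q, m) inputs u_0 .. u_{m-1}
    """
    n = X.shape[0]
    omega = np.vstack([X, U])              # stacked state and input snapshots
    G = Xp @ np.linalg.pinv(omega)         # [A B] via the Moore-Penrose pseudoinverse
    return G[:, :n], G[:, n:]              # A is (n, n), B is (n, q)

def controllability_matrix(A, B, T):
    """K = [B, AB, A^2 B, ..., A^(T-1) B], shape (n, q*T)."""
    blocks = [B]
    for _ in range(T - 1):
        blocks.append(A @ blocks[-1])      # next block is A times the previous one
    return np.hstack(blocks)

# Synthetic check: simulate a known, noise-free linear system driven by random inputs.
rng = np.random.default_rng(0)
n, q, m = 4, 2, 200
A_true = 0.9 * np.linalg.qr(rng.standard_normal((n, n)))[0]  # all eigenvalues have |λ| = 0.9
B_true = rng.standard_normal((n, q))
U = rng.standard_normal((q, m))
states = np.zeros((n, m + 1))
for t in range(m):
    states[:, t + 1] = A_true @ states[:, t] + B_true @ U[:, t]

A_hat, B_hat = fit_dmdc(states[:, :-1], states[:, 1:], U)
K = controllability_matrix(A_hat, B_hat, T=n)
print(np.allclose(A_hat, A_true), np.allclose(B_hat, B_true), K.shape)
```

With noise-free, fully observed data the fit recovers A and B essentially exactly; real recordings are neither, which is the gap SubspaceDMDc is described as addressing.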

What AToM Proposed (Independently, Before Reading InputDSA)

AToM emerged from a question about human coherence rather than neuroscience:

Why do some systems—minds, relationships, groups, cultures—break coherently and others fracture?

Through intense synthesis, the framework converged on a simple invariant:

Meaning = coherence under constraint.

And coherence, it turned out, could be expressed with families of mathematics that overlap with those used in InputDSA:

- information geometry

- dynamical systems

- topological data analysis

AToM defines a coherence tuple:

C = (κ, d, Hₖ, ρ)

where:

- κ = curvature smoothness

- d = dimensional stability

- Hₖ = topological persistence

- ρ = cross-frequency coupling

And it proposes (conceptually) a Coherence Operator Ĉ, which integrates these elements into a unified geometric functional.
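AToM defines the tuple conceptually and this post gives no formulas for it, so any code is necessarily an interpretation. As a hypothetical illustration only, the sketch below picks two common stand-ins from dynamical-systems practice: the participation ratio of windowed covariance eigenvalues for d (with its spread across windows standing in for "stability"), and the variability of turning angles along a trajectory for κ's "curvature smoothness". Hₖ (which would need a persistent-homology library) and ρ are omitted. The names `coherence_proxies`, `kappa_roughness`, and `d_instability` are invented here, not AToM's definitions.

```python
import numpy as np

def participation_ratio(Y):
    """Effective dimensionality of samples Y (time x features): (sum λ)^2 / sum λ^2."""
    lam = np.linalg.eigvalsh(np.cov(Y, rowvar=False))
    lam = np.clip(lam, 0.0, None)               # remove tiny negative round-off
    return lam.sum() ** 2 / np.sum(lam ** 2)

def turning_angles(Y):
    """Angle (radians) between successive step vectors of a trajectory Y (time x features)."""
    V = np.diff(Y, axis=0)
    norms = np.linalg.norm(V, axis=1)
    cos = np.sum(V[:-1] * V[1:], axis=1) / (norms[:-1] * norms[1:])
    return np.arccos(np.clip(cos, -1.0, 1.0))

def coherence_proxies(Y, window=50):
    """Hypothetical stand-ins for two entries of C = (κ, d, Hₖ, ρ); not AToM's definitions."""
    prs = [participation_ratio(Y[i:i + window])
           for i in range(0, len(Y) - window + 1, window)]
    angles = turning_angles(Y)
    return {
        "kappa_roughness": float(np.mean(np.abs(np.diff(angles)))),  # lower = smoother bending
        "d_mean": float(np.mean(prs)),
        "d_instability": float(np.std(prs)),                         # lower = steadier dimension
    }

# A clean circular trajectory in 3-D versus the same trajectory with added jitter.
t = np.linspace(0, 4 * np.pi, 400)
circle = np.column_stack([np.cos(t), np.sin(t), np.zeros_like(t)])
noisy = circle + 0.05 * np.random.default_rng(1).standard_normal(circle.shape)
smooth_result = coherence_proxies(circle)
noisy_result = coherence_proxies(noisy)
print(smooth_result)
print(noisy_result)
```

The clean circle bends at a constant rate and every window has the same shape, so both roughness and instability come out near zero; jitter raises the roughness sharply.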

AToM’s predictions (pre-InputDSA):

- Trauma: dimensional collapse + curvature spikes + persistent bottlenecks + hysteresis

- Secure attachment: smooth, high-dimensional manifolds

- Anxious attachment: steep curvature under uncertainty

- Avoidant attachment: flat manifolds with decoupled channels

- Disorganized attachment: fractured, multi-map topology

- Cultural stress: narrative compression

- Institutional failure: topological bottlenecking + collapse of controllability

All of these were formulated before ever seeing InputDSA.

The Convergence I Did Not Expect

When I finally read InputDSA tonight, the shock was simple:

Their hard empirical mathematics mapped almost one-for-one onto structures AToM had predicted should exist—but hadn’t yet known how to measure.

The match was structural, not cosmetic.

Here are the clearest examples:

1. Controllability Matrices ↔ Trauma & Attachment Input-Responsiveness

InputDSA’s controllability matrix:

K = [B AB A²B …]

It measures:

- how input propagates

- which directions are controllable

- which are “dead directions”
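To make "controllable" versus "dead" directions concrete: the column space of K is the set of state directions that inputs can reach, and its orthogonal complement contains directions no input sequence can push the state along. A minimal sketch on a hand-built 3-state toy system (not data from the paper), using an SVD with an assumed relative tolerance `rel_tol` to decide which singular values count as zero; `controllable_split` is a hypothetical helper name.

```python
import numpy as np

def controllable_split(A, B, rel_tol=1e-10):
    """Split state space into directions inputs can reach and "dead" directions they cannot.

    Builds K = [B, AB, ..., A^(n-1) B]; its column space is the reachable subspace,
    and the orthogonal complement holds directions no input sequence can move.
    """
    n = A.shape[0]
    blocks = [B]
    for _ in range(n - 1):
        blocks.append(A @ blocks[-1])
    K = np.hstack(blocks)
    U_k, s, _ = np.linalg.svd(K)                     # U_k is (n, n) by default
    rank = int(np.sum(s > rel_tol * s[0]))           # count non-negligible singular values
    return U_k[:, :rank], U_k[:, rank:]

# Toy 3-state system: the single input drives states 0 and 1; state 2 only decays on its own.
A = np.array([[0.5, 1.0, 0.0],
              [0.0, 0.5, 0.0],
              [0.0, 0.0, 0.8]])
B = np.array([[0.0],
              [1.0],
              [0.0]])
reachable, dead = controllable_split(A, B)
print(reachable.shape[1], np.round(np.abs(dead.ravel()), 6))
```

Using n blocks is enough to determine the rank (by the Cayley–Hamilton theorem); the horizon T in InputDSA's K is a separate modeling choice.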

AToM had independently predicted:

- trauma = collapse of input responsiveness

- secure systems = broad controllability spectrum

- treatment = restoring controllable dimensions

InputDSA’s RNN results showed:

- successful agents had slower singular-value decay in B

- failed agents collapsed into low-dimensional input coupling

AToM made the same claim about trauma vs. security.

2. Partial Observability → The Social-Scale Problem Solved

InputDSA’s breakthrough is handling partial observation with SubspaceDMDc.

AToM’s challenge:

Every real human system is partially observed—therapy, families, teams, cultures.

InputDSA provides the missing machinery to make AToM empirically evaluable.
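A common, simpler way to cope with partial observation is delay (Hankel) embedding: stack time-lagged copies of the few measured channels so that unobserved state can be reconstructed from recent history. The sketch below illustrates that general idea only; it is not the SubspaceDMDc algorithm, and `hankel_embed` and the delay count are illustrative assumptions. A hidden 2-D rotation is observed through a single coordinate, and two delays are enough to recover its rotation rate.

```python
import numpy as np

def hankel_embed(Y, delays):
    """Row t of the result is [y_t, y_{t+1}, ..., y_{t+delays-1}] for Y of shape (time, channels).

    Output shape: (time - delays + 1, channels * delays).
    """
    T = Y.shape[0]
    return np.hstack([Y[k:T - delays + 1 + k] for k in range(delays)])

# Hidden 2-D state rotating by theta each step; only its first coordinate is measured.
theta = 0.3
R = np.array([[np.cos(theta), -np.sin(theta)],
              [np.sin(theta),  np.cos(theta)]])
x = np.array([1.0, 0.0])
measured = []
for _ in range(100):
    measured.append(x[0])
    x = R @ x
Y = np.array(measured)[:, None]                # shape (100, 1): one observed channel

H = hankel_embed(Y, delays=2)                  # shape (99, 2)
A_hat = H[1:].T @ np.linalg.pinv(H[:-1].T)     # linear map between successive delay vectors
rates = np.sort(np.abs(np.angle(np.linalg.eigvals(A_hat))))
print(H.shape, np.linalg.matrix_rank(H), rates)  # rates ≈ [0.3, 0.3]
```

The single measured channel alone cannot reveal the rotation, but pairs of consecutive samples can, because cos(θ(t+2)) = 2cos(θ)cos(θ(t+1)) − cos(θt) is an exact linear relation.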

3. Intrinsic vs. Input-Driven Dynamics ↔ The Core Therapy Question

InputDSA separates:

- intrinsic dynamics (A)

- input coupling (B)

- joint controllability (K)

AToM’s core therapy questions mirror this structure exactly:

- Does healing require shifting intrinsic attractors (A)?

- Restoring input responsiveness (B)?

- Rebuilding controllability (K)?

The match is direct.

4. Surrogate Robustness ↔ The “What Is Input to a Relationship?” Problem

InputDSA showed:

Similarity measures are robust even when the true inputs are unknown—if surrogate inputs correlate with true drivers.

This solves AToM’s biggest practical obstacle:

- What counts as “input” to therapy?

- To a marriage?

- To a culture?

Now we know:

You just need a surrogate with a signal-to-error ratio near 1.
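One way to build intuition for a signal-to-error ratio (SER) near 1: suppose the surrogate is the true input plus independent error, u_s = u + e, and define SER here as var(u)/var(e) (an assumed definition; the paper's exact one may differ). Then the surrogate's correlation with the true driver is 1/√(1 + 1/SER), which is about 0.71 at SER = 1. A quick simulation checks the formula; `surrogate_correlation` is a hypothetical helper.

```python
import numpy as np

def surrogate_correlation(ser, n=200_000, seed=0):
    """Empirical corr(u, u + e) when var(u)/var(e) = ser (assumed SER definition)."""
    rng = np.random.default_rng(seed)
    u = rng.standard_normal(n)                 # true (unknown) input, variance 1
    e = rng.standard_normal(n) / np.sqrt(ser)  # independent error, variance 1/ser
    return np.corrcoef(u, u + e)[0, 1]

for ser in (0.25, 1.0, 4.0):
    predicted = 1.0 / np.sqrt(1.0 + 1.0 / ser)
    print(f"SER={ser:>4}: empirical {surrogate_correlation(ser):.3f}, predicted {predicted:.3f}")
```

This only shows how much of the true driver a noisy surrogate retains; whether similarity scores stay stable at that level is the paper's empirical result, not something this snippet demonstrates.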

A Concrete Example: Trauma as Controllability Collapse

AToM’s Prediction (Pre-InputDSA):

Trauma =

- dimensionality collapse

- curvature spikes

- attractor bottlenecks

- reduced controllability

InputDSA makes this testable:

- A (intrinsic): reduced spectral radius

- B (input): steeper singular-value decay

- K (controllability): fewer controllable directions
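Each of the three signatures above can be read off fitted matrices. A minimal sketch, assuming A and B have already been estimated (for example with a DMDc-style fit) and using two made-up diagonal systems for contrast. The function name `trauma_signature`, the log-singular-value slope as a "decay" summary, and the 1% cutoff for counting controllable directions are illustrative choices, not metrics from the InputDSA paper; whether such numbers track trauma is exactly the prediction under test.

```python
import numpy as np

def trauma_signature(A, B, rel_tol=0.01):
    """Hypothetical summary of the three predicted signatures for fitted (A, B).

    Returns:
      rho   : spectral radius of A (largest |eigenvalue|)
      slope : slope of log singular values of B vs. index (more negative = steeper decay)
      k_eff : number of singular values of K = [B, AB, ..., A^(n-1) B] above rel_tol * largest
    """
    n = A.shape[0]
    rho = float(np.max(np.abs(np.linalg.eigvals(A))))
    s_B = np.linalg.svd(B, compute_uv=False)           # sorted largest first; assumed all > 0
    slope = float(np.polyfit(np.arange(len(s_B)), np.log(s_B), 1)[0])
    blocks = [B]
    for _ in range(n - 1):
        blocks.append(A @ blocks[-1])
    s_K = np.linalg.svd(np.hstack(blocks), compute_uv=False)
    k_eff = int(np.sum(s_K > rel_tol * s_K[0]))
    return rho, slope, k_eff

# Two made-up 4-state systems: one with broad input coupling, one "collapsed".
A_broad = np.diag([0.95, 0.9, 0.85, 0.8])
B_broad = np.diag([1.0, 0.9, 0.8, 0.7])
A_collapsed = np.diag([0.6, 0.5, 0.4, 0.3])
B_collapsed = np.diag([1.0, 0.05, 0.0025, 0.000125])

print(trauma_signature(A_broad, B_broad))
print(trauma_signature(A_collapsed, B_collapsed))
```

The "collapsed" toy shows all three signatures at once: smaller spectral radius, a much steeper decay slope, and fewer directions above the cutoff.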

You can now operationalize trauma geometry using:

- HRV (heart-rate variability)

- EDA (electrodermal activity)

- respiration

- microtiming

- narrative curvature

- rupture–repair cycles

This is how two independent frameworks snap into one geometry.

What I Am Not Claiming

(Explicit corrections)

❌ AToM is not LLM-generated

❌ No LLM co-authored the ideas

❌ No claim of “unified theory of everything”

❌ InputDSA doesn’t validate AToM

❌ AToM is not empirically proven

❌ Cultural systems ≠ neural systems

What I Am Claiming

✓ The math matches

✓ AToM predicted invariants that InputDSA later measures

✓ InputDSA offers the empirical tools AToM needed

✓ This makes AToM falsifiable in a crisp way

✓ Cross-domain invariants appear to be real

✓ The convergence was unplanned and therefore meaningful

Why This Matters

Because it demonstrates that:

Human-origin rapid-synthesis frameworks, combined with LLM-assisted formalization, can sometimes produce structures that touch the empirical frontier of neuroscience.

And when two independently derived frameworks converge—one empirical, one theoretical—the underlying geometry is probably real.

Methodological Transparency: How AToM Was Built

AToM came from:

- forty years of lived pattern-recognition

- a week of intensive conceptual synthesis

- a unique autistic cognition

- LLMs used purely as:
  - fast scratch paper
  - dialectical partners
  - consistency enforcement
  - notation debugging
  - document memory extension

Not one conceptual invariant was produced by Grok, Claude, or ChatGPT.

AToM is human-origin.

The LLMs only accelerated refinement and removed contradictions.

Where This Goes

If AToM is right:

- InputDSA generalizes to relational and cultural systems

- Trauma, attachment, education, organization, and myth become measurable

- Coherence becomes an empirical variable

- Meaning becomes computational

If AToM is wrong:

- It will fail fast

- The framework gets rewritten

- Science moves forward

Either way, nothing is lost.

To the InputDSA Authors

Your work supplied the empirical tools AToM needed but hadn’t yet known existed.

The convergence emerged only after AToM was already fully articulated.

If this mapping holds under empirical testing, it’s because the geometry is real—not because the theory borrowed.

If You’re a Researcher

AToM now makes direct predictions you can falsify:

- trauma = controllability collapse

- attachment = curvature signature

- myth-stress = attractor compression

- institutional decay = topological bottlenecks

- relational repair = expansion of controllable subspace

All testable using InputDSA, physiological telemetry, linguistic embeddings, or TDA.

If you want to collaborate, I’m here.

If you want to tear it apart, even better.

Data decides.